Pedro Páramo GPT

It only takes a few prompts with ChatGPT to understand its strengths and weaknesses. I was impressed to see how it could summarize Homer's Iliad in the form of a rap song. However, I was disappointed when it struggled to provide the right answers when asked specific questions about Juan Rulfo's Pedro Páramo. It began to fabricate details of the story, and despite multiple corrections, it couldn’t even acknowledge it didn’t know.

While we're aware that ChatGPT's knowledge isn't always reliable, it is worth exploring its abilities to summarize and analyze texts. Knowing that its training data might have included more information about Homer's works than Juan Rulfo's, I wondered if ChatGPT can adequately interpret a story if I provide it.

Furthermore, does ChatGPT truly know (and understand) the entire Iliad, or is it possible it just took a summary from somewhere and rewrote it as a rap song?

Artificial Neural Networks, the foundation of large models like ChatGPT, have been employed for years in image and sound processing with impressive results. But how can a technology trained to predict the next word in a sentence be used to interpret text and extract factual data?

With all this questions in my head I started experimenting with Large Language Models (LLMs).

Diving into LLMs

As someone intrigued by technology, I balance my fascination with a business-minded, pragmatic perspective, refusing to be swept up in the hype. Right from the beginning of my exploration into AI, I decided to move beyond just absorbing what others have been saying about it, opting instead to learn through hands-on experimentation.

I am also fascinated with literature (although I’ve never ready the Iliad, so I have to rely on that rap song ChatGPT made for me) so I decided to practice with one of my favourite novels, Pedro Páramo.

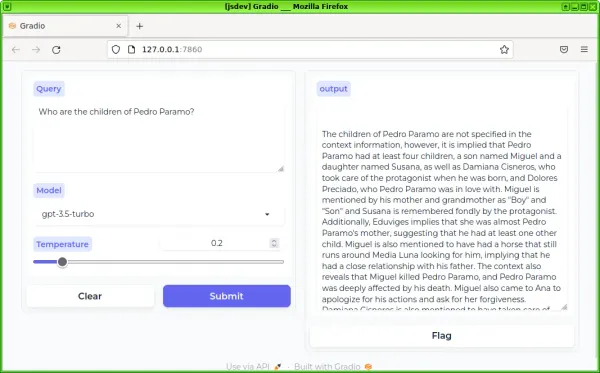

I've been familiarizing myself with AI technologies and I put together a Gradio App that would allow me to continue exploring GPT-4’s capabilities (the LLM behind ChatGPT) to get a sense of its capability.

I've learned a lot about the capabilities and limitations of GPT-4, as well as about other LLMs. I continue experimenting, and will be sharing my findings.

Pedro Páramo

Pedro Páramo is a novel by the Mexican writer Juan Rulfo written in 1956 and tells the story of Juan Preciado, who comes back to home town Comala, to meet his father, Pedro Páramo. He learns that his father had died and interacts with some inhabitants of the town, who later we find they are ghosts. There are different timelines in the story where we learn about Pedro Páramo. It’s a beautiful short story that has inspired many authors, you should give it a try.

To pay tribute to the story, I named my company, founded in 2005 and sold in 2022, "Comalatech." I also incorporated excerpts from the story into some of our marketing material.

My plan was to provide ChatGPT with the full novel and then pose questions about it, such as identifying the main characters, understanding their relationships, and discussing events throughout the story.

Dealing with Memory limitations

In LLMs, data is broken down into tokens, which roughly equate to words and punctuation. Due to their architecture and memory (context) limitations, handling an extended context would demand significantly more computational power, making real-time interactions both inefficient and impractical.

GPT-4’s maximum context is 32,768 tokens, but the version of Pedro Paramo I found is made of 46,860 tokens.

Simply breaking down the data in chunks and analyzing them separately doesn't work well, because stories need to be seen as a complete narrative, not just separate paragraphs. So, I shortened the story to fit within the 32K limit, cutting out certain sections and focusing my queries only on what I kept.

With the Gradio App ready, and the abridge version of Pedro Páramo, I was ready to start prompting GPT-4 about the story.

Prompting

SPOILER ALERT: I will be revealing key plot details of the story, so if you interested in the story itself, it may be a good idea to stop and read it. It is beautiful and only 120+ pages 😉

The app I created wraps the text of the story, some instructions (which I’ll explain later) and the query, in a single prompt and queries GPT-4.

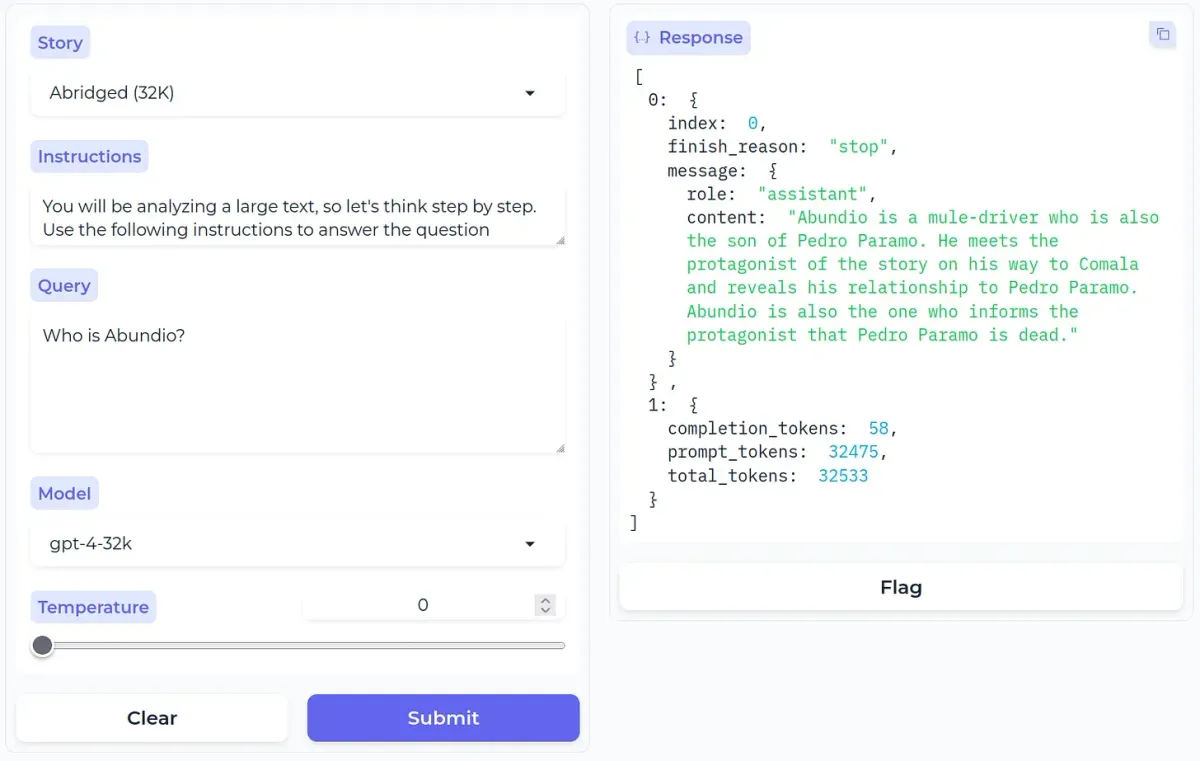

I started by prompting about Abundio, a character who is mentioned at the beginning, middle and at the very end of the story.

Who is Abundio?

This is the response I’ve got:

"Abundio is a mule-driver who is also the son of Pedro Paramo. He meets the protagonist of the story on his way to Comala and reveals his relationship to Pedro Paramo. Abundio is also the one who informs the protagonist that Pedro Paramo is dead." 🤖

That’s nice but it only includes what is mentioned at the beginning of the story. More details about him is provided at the middle, and at the very end, and as a matter of fact he happens to kill Pedro Páramo.

From the novel, this what we know about Abundio:

- He guides Juan Preciado to Comala, and reveals he is also a son of Pedro Páramo, and that Pedro Páramo had died

- Earlier in his life, he becomes deaf and stops speaking because of an accident

- We find out that we he met Juan Preciado, he had already died

- His wife Cuca, dies

- He sold his mules to pay for the treatment of Cuca

- He comes to Pedro Páramo’s home to ask for money for Cuca’s funeral, he is denied and kills Pedro Páramo

So how come it missed most of the details? Does it mean that GPT-4 is just too dumb to figure this out?

Not really. What happens is that GPT-4, like humans, needs some help figuring things out. Let’s remember that the basis of a LLM is the prediction of the next token in the response. If we only provide a large text and the question, it would try to do the best it can with the knowledge in natural language it has, which often is not enough.

But if we provide some guidance (i.e. tuning prompts) the it can adjust what is learning (i.e the neural network mapping the relationships between the tokens) and improve its answers.

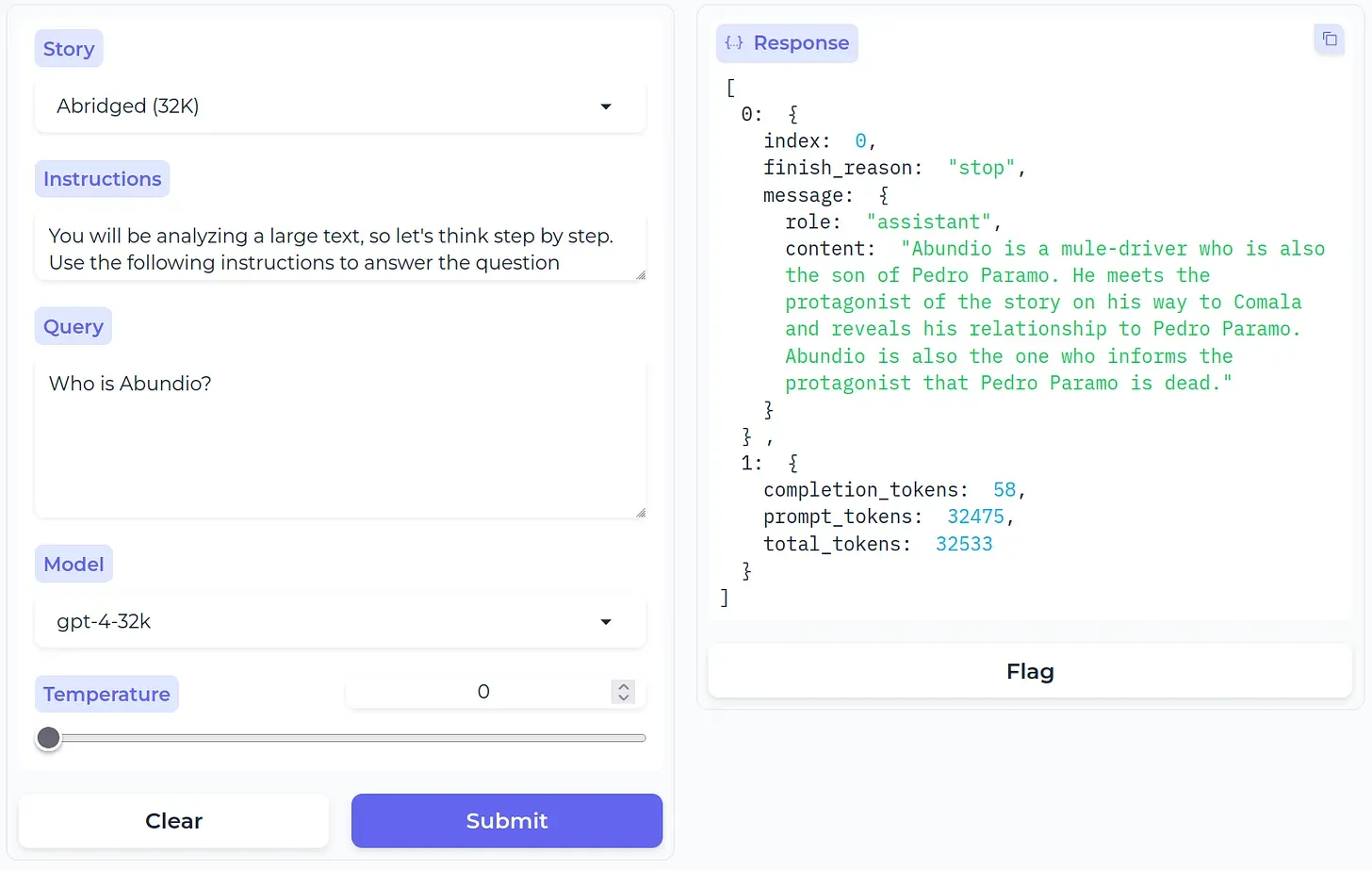

OpenAI provides a great guide to help proving the reliability of the prompts. After several hours of experimentation, I came up with the following instructions.

You will be analyzing a large text, so let's think step by step.

Use the following instructions to answer the question

1. Keep track of all the named characters in the story from the beginning to the end

2. Keep track of the family relationship between the character, specially siblings and parents

3. Keep track of the timelines of the story

4. Identify the relevant events in the story

5. Combine the different events and characters to answer the question

6. Explain the reasoning

7. Answer the question in a concise way, providing only information relevant to the questionSo, when making a query to ChatGPT (using the OpenAI API), I include the instruction at the beginning of the prompt, followed by the story and the specific question I'm asking.

So I try again.

Who is Abundio?

"Abundio is a mule-driver who is also the son of Pedro Paramo. He is the one who guides the protagonist to Comala and reveals his shared paternity with Pedro Paramo. He later becomes deaf and stops speaking after a firecracker accident. Abundio is also the one who ultimately kills Pedro Paramo." 🤖

This is much better… it missed some details, but this time, it caught the key fact that he killed Pedro Páramo… Let’s now try another character in the story.

Who is Miguel Páramo?

{… finish_reason: "content_filter"..}

🤖

Uh? What this actually means is that OpenAI found the content of the response as potentially harmful and it censored it… more or this later, but let’s move on to another question.

Who killed Miguel Páramo?

“Miguel Paramo, the son of Pedro Paramo, was killed in a horse riding accident. His horse, Colorado, was known to be wild and difficult to control. One night, Miguel made the horse jump over a stone wall to avoid a longer route. After the jump, Miguel continued riding but later realized that the town he was looking for, Comala, had disappeared. He returned to tell Eduviges Dyada, a woman he had a relationship with, about the incident. However, he was already dead by the time he reached her, having been killed by the fall from his horse.”

🤖

Now, this is impressive. It summarized almost perfectly what actually happened to Miguel Páramo, including how he came to tell Eduviges Dyada without knowing he was already dead.

But it got one detail wrong: the town he was looking for was Contla, not Comala, which is clearly stated in the paragraphs taking about his death.

But, how come it got everything right but a fundamental piece wrong?

What if we ask specifically what town was he looking for?

What town was Miguel Paramo heading to when he died?

"Miguel Paramo was heading to the town of Comala when he died."

🤖

Wrong again! What is actually written is that “He was going to see his girlfriend in a town called Contla“ which he couldn’t find.

Now let’s ask about who was he looking for.

Who was Miguel Paramo looking for when he died?

"Miguel Paramo was looking for his father, Pedro Paramo, when he died. His mother had told him on her deathbed to seek out his father in Comala and demand what was rightfully theirs. However, upon reaching Comala, Miguel learns from a woman named Eduviges that his father had died many years ago."

🤖

Oh dear! now it is confusing Miguel Páramo with Juan Preciado… and it was not Eduviges who told Juan Preciado that Pedro Páramo had died…

Let’s ask just slightly differently, changing ‘looking for’ with ‘going to see’ and see what happens.

Who was Miguel Paramo going to see when he died?

"Miguel Paramo was going to see his girlfriend in a town called Contla when he died. This is inferred from the story where it is mentioned that Miguel Paramo used to visit his girlfriend in Contla and on the night of his death, he was unable to find the town."

🤖

It finally gets right the town of Contla… 🤦♂️

Note also what a big difference makes simply changing who was he looking for with who was he going to see.

Why?

At times, it produces impressive responses that make you think it genuinely understands the topic. However, there are instances when it completely misses the point, overlooking obvious details.

I’ve been going back and forth from “Whoa!” to “seriously?” throughout my experimentation.

So, why?

I am probably going to get shot by those who actually know, but here’s my interpretation of how things work (my thoughts, with a little bit of help from ChatGPT to clarify):

LLMs work by breaking down the input into tokens, sequentially linked, associating each token with other related tokens with a ranking. This ranking is influenced by the strength of relationships between tokens, based on the model's training data and the input itself. The primary objective is to predict the next token in a sequence, which comes to us in the for of the answer.

So, how tokens are associated (the ranking, or how close or far they are) depend on the training data, and the input data itself, which in our example, it comes in the form of the the instructions, the story and the actual question.

The association (ranking) changes as it processes the tokens, including input and output (i.e. the predicted token).

And often, when facing with multiple options to choose from, the model relies on randomness: when it gets to a point with there are multiple potential tokens with similar rank, it just randomly chooses one.

Let’s look at the response about how Miguel Paramo died:

“Miguel Paramo, the son of Pedro Paramo, was killed in a horse riding accident. His horse, Colorado, was known to be wild and difficult to control. One night, Miguel made the horse jump over a stone wall to avoid a longer route. After the jump, Miguel continued riding but later realized that the town he was looking for, Comala, had disappeared…”

🤖

… and when we ask specifically where was he heading:

"Miguel Paramo was heading to the town of Comala when he died."

🤖

What happens is that not only “Comala” is mentioned throughout the story more frequently than “Contla”, but quite often is mentioned in the context of heading/looking for, from the very beginning.

So when it comes time to predict what comes after “Miguel Paramo was heading to the town of ….” Comala ranking is higher than Contla.

so what happens if we add more context, to tune the ranking of the associations?

What town was Miguel Paramo heading to when he died, Comala or Contla?

"Miguel Paramo was heading to Contla when he died. This is inferred from the conversation between Abundio and Pedro Paramo, where Abundio mentions that Miguel Paramo used to visit his girlfriend in Contla. Later, when Abundio recounts the death of Miguel, he mentions that Miguel couldn't find Contla, suggesting that was his intended destination."

🤖

This is correct… but the explanation is not accurate: it is not Abundio who mentions this but Eduviges Dyada. 🤦♂️

Conclusion

My objective with all this experimentation is to better understand how LLMs work, and I am making some progress.

If we want to prove that ChatGPT is great, we can find ways to do so, such as having it pass business, medical, or bar exams.

But does that mean it truly understands or that it is capable of reasoning? Or is it merely replicating patterns and algorithms without genuine understanding? If anything, we've learned what many of us already knew: passing an exam doesn't mean you truly understand the subject matter.

If we want to prove that ChatGPT is useless for real-life applications, all we need to do is test it in scenarios where 100% accuracy is required with even slightly complex data.

Justifying our skepticism is always easy, but identifying opportunities with new tools and technologies, as limited as they may be, is what I am after.

It's not about what it knows or how smart it is, but what we can do with its knowledge and capabilities. We shouldn't be looking for the perfect feature or tool, but rather seeking opportunities with the tools we have. For that, the same tried-and-true intelligence and creativity make all the difference.

Maybe ChatGPT is more about the "Whys" than the "Whats"?

I am continue exploring ways to improve accuracy using specific instructions and parameters to narrow down the responses, as well as evaluating other LLMs. I will share what I learn.

Dedicated to Sergio Sloseris Z”L, with whom I shared curiosity and skepticism about technology, and passion for Latinamerican culture.